Research

Computer Vision, Physical AI, Robotics: My research focuses on enabling robotic AI systems that operate in the physical world, learn new skills through physical experience, and generalize across new cameras, embodiments, and scenarios. I pursue this through a few core pillars:

News

[2025]

Publications

(* indicates equal contribution, † indicates corresponding author or equal advising)

Online Language Splatting

Saimouli Katragadda, Cho-Ying Wu, Yuliang Guo†, Xinyu Huang, Guoquan Huang, Liu Ren
ICCV 2025 [paper] [project page] [code] [video]
A fully online system that integrates dense CLIP features with Gaussian Splatting. High-resolution dense CLIP embedding and online compressor-learning modules enable dense language mapping in real time (40+ FPS) while retaining open-vocabulary capability for flexible, query-based human-machine interaction.

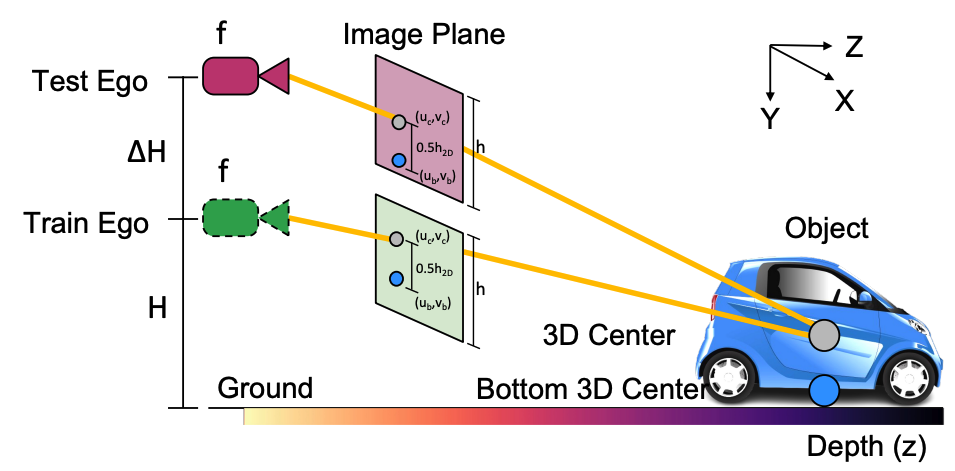

CHARM3R: Towards Unseen Camera Height Robust Monocular 3D Detector

Abhinav Kumar, Yuliang Guo, Zhihao Zhang, Xinyu Huang, Liu Ren, Xiaoming Liu
ICCV 2025 [paper] [code]
A mathematical derivation of the consistent depth-error trends that Mono3D models exhibit under camera height variations, and a method that generalizes across camera heights by averaging ego-regressed and ground-based depth estimates.
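
The ground-based estimate can be made concrete with a minimal sketch. It assumes a flat ground plane, a pinhole camera with zero pitch and roll mounted `cam_height` metres above that plane, and that the object's bottom-centre pixel row `v_bottom` touches the ground; under these assumptions the ground point's depth is $z = f_y h / (v - c_y)$, which depends directly on the camera height $h$. The equal-weight average and the function names are hypothetical illustrations, not CHARM3R's actual implementation.

```python
def ground_depth(v_bottom, fy, cy, cam_height):
    """Depth of a ground-plane point seen at image row v_bottom.

    Assumes a pinhole camera with zero pitch and roll, mounted cam_height
    metres above a flat ground plane, with the image y-axis pointing down.
    """
    dv = v_bottom - cy
    if dv <= 0:
        # Ground points at finite depth must project below the principal point.
        raise ValueError("ground point must project below the principal point")
    return fy * cam_height / dv


def fused_depth(z_regressed, v_bottom, fy, cy, cam_height):
    """Hypothetical equal-weight average of a regressed and a ground-based depth."""
    z_ground = ground_depth(v_bottom, fy, cy, cam_height)
    return 0.5 * (z_regressed + z_ground)


# Hypothetical numbers: fy = 700 px, cy = 360 px, camera 1.5 m above the ground.
print(ground_depth(v_bottom=430.0, fy=700.0, cy=360.0, cam_height=1.5))  # 15.0
print(fused_depth(z_regressed=16.0, v_bottom=430.0, fy=700.0, cy=360.0, cam_height=1.5))  # 15.5
```

Because the two estimates react differently when the camera height changes, combining them is a plausible way to reduce sensitivity to height; the exact weighting used in the paper may differ.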

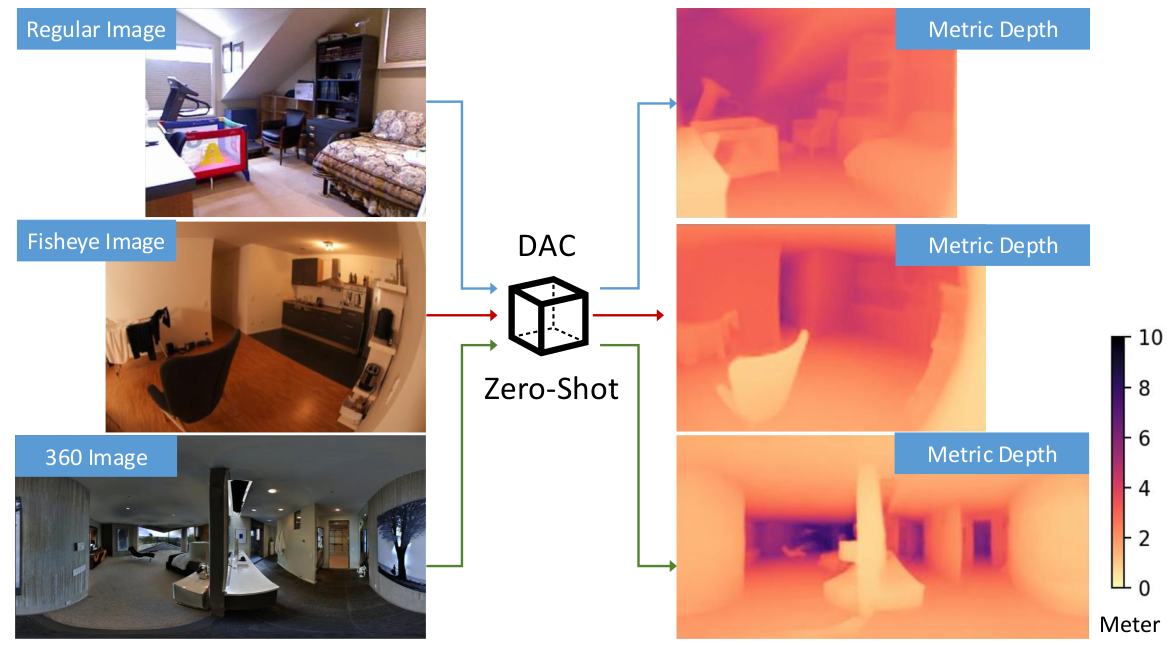

Depth Any Camera: Zero-Shot Metric Depth Estimation from Any Camera

Yuliang Guo†, Sparsh Garg, S. Mahdi H. Miangoleh, Xinyu Huang, Liu Ren
CVPR 2025 [paper] [project page] [code] [video]
Depth Any Camera (DAC) is a training framework for metric depth estimation that enables zero-shot generalization across cameras with diverse fields of view, including fisheye and 360° images. Tired of collecting new data for every camera setup? DAC maximizes the utility of existing 3D datasets, making them applicable to a wide range of camera types without retraining.

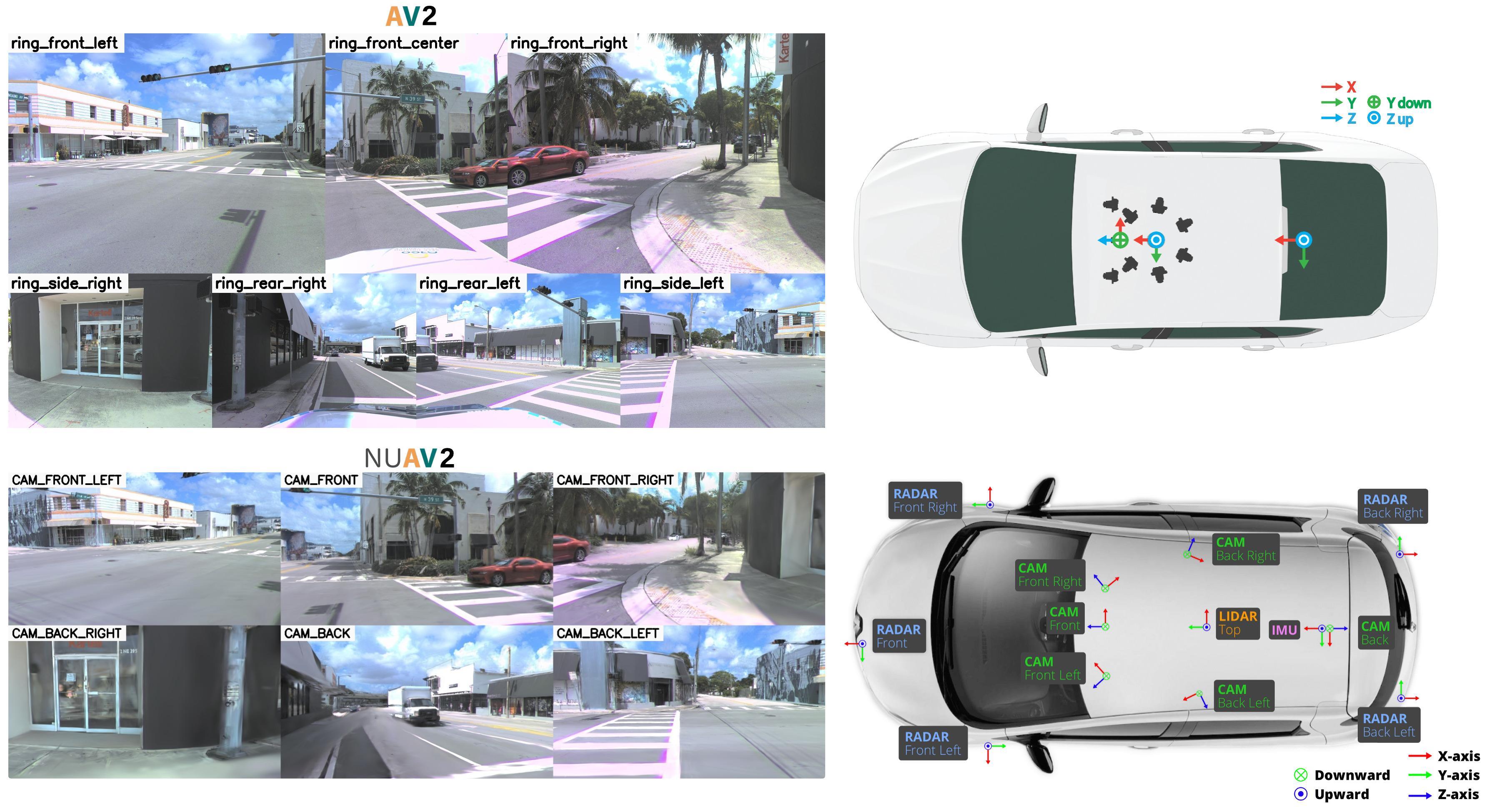

MapGS: Generalizable Pretraining and Data Augmentation for Online Mapping via Novel View Synthesis

Hengyuan Zhang, David Paz, Yuliang Guo, Xinyu Huang, Henrik I. Christensen, Liu Ren
IEEE IV 2025 [paper] [project page]
Using Argoverse 2 (AV2) sensor configurations, we employ Gaussian splatting (GS) to synthesize images in the nuScenes (NUSC) setup. We show that training online mapping models on GS-simulated data improves cross-dataset generalization.

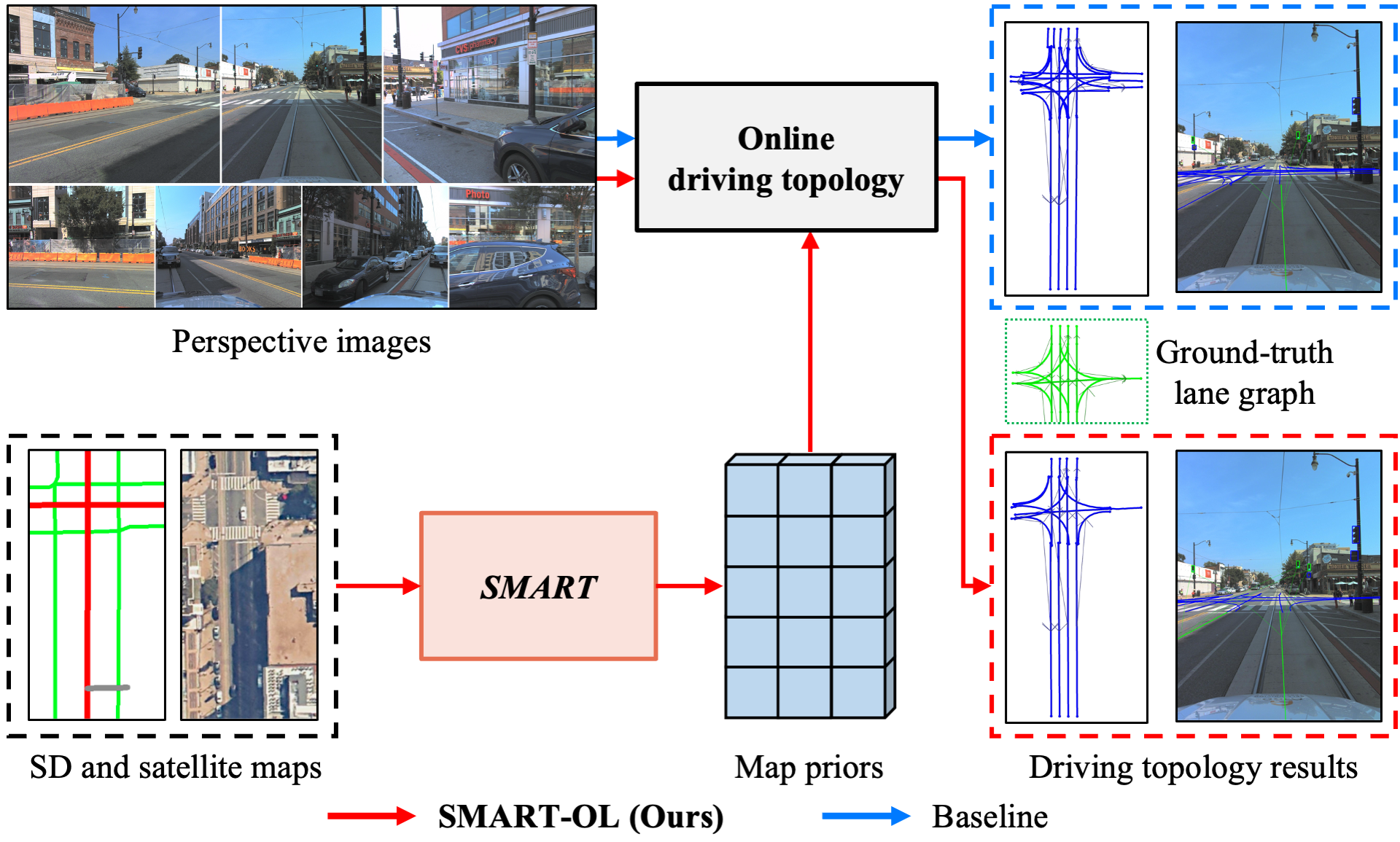

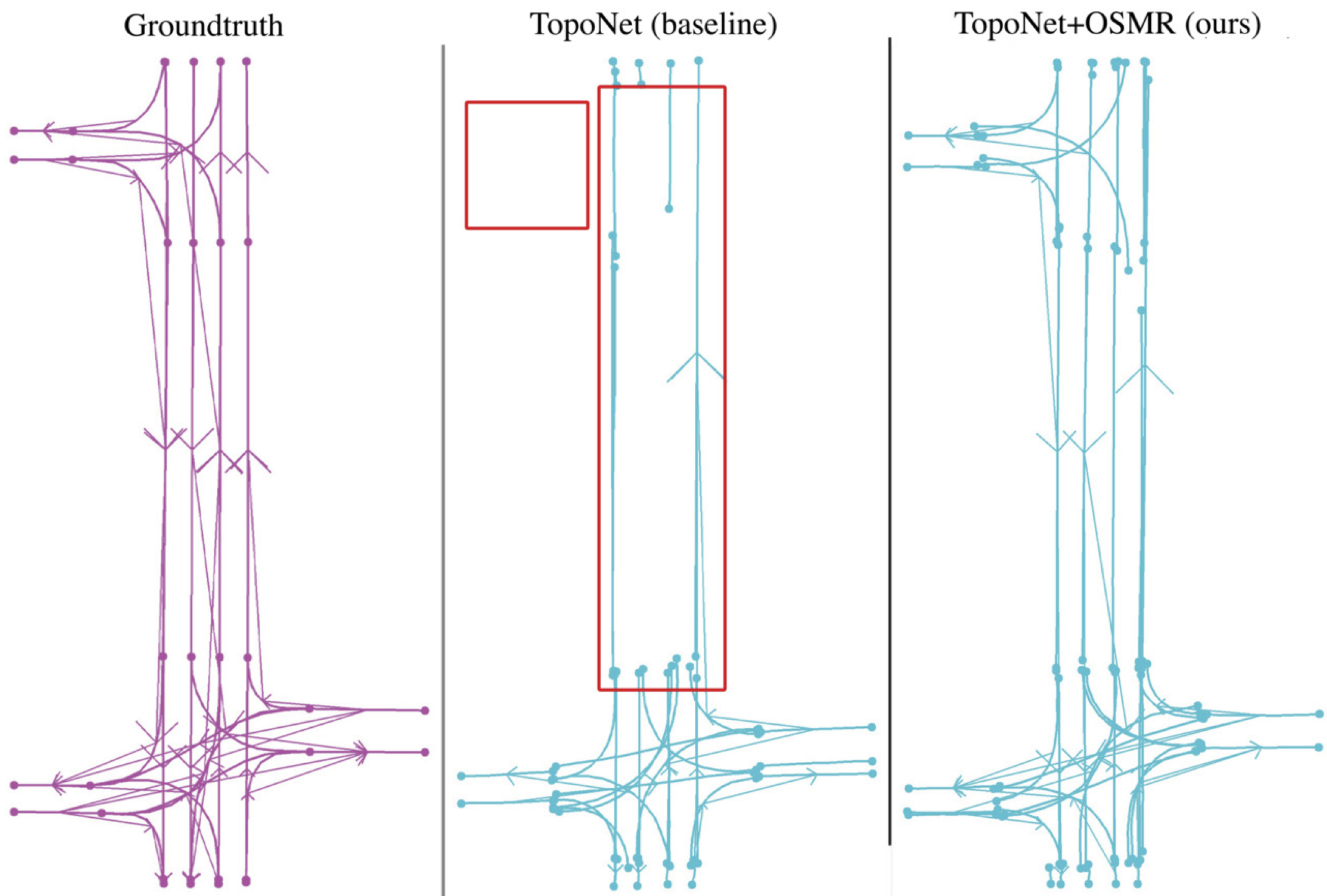

SMART: Advancing Scalable Map Priors for Driving Topology Reasoning

Junjie Ye, David Paz, Hengyuan Zhang, Yuliang Guo, Xinyu Huang, Henrik I. Christensen, Yue Wang, Liu Ren
ICRA 2025 [paper] [project page]
SMART augments online topology reasoning with robust map priors learned from scalable SD and satellite maps, substantially improving lane perception and topology reasoning.

SUP-NeRF: A Streamlined Unification of Pose Estimation and NeRF for Monocular 3D Object Reconstruction

Yuliang Guo†, Abhinav Kumar, Cheng Zhao, Ruoyu Wang, Xinyu Huang, Liu Ren
ECCV 2024 [paper] [project page] [code] [video]
A monocular object reconstruction framework that effectively integrates object pose estimation and NeRF-based reconstruction. A novel camera-invariant pose estimation module resolves depth-scale ambiguity and enhances cross-domain generalization.
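
The depth-scale ambiguity follows from the pinhole model: scaling an object's camera-frame coordinates $(X, Y, Z)$ by any $s > 0$ leaves its projection unchanged, so a single image cannot distinguish a small, near object from one $s$ times larger and $s$ times farther away. A short derivation, assuming an ideal pinhole camera with focal lengths $f_x, f_y$ and principal point $(c_x, c_y)$ (symbols chosen here for illustration, not taken from the paper):

```latex
\begin{aligned}
u &= f_x \frac{X}{Z} + c_x, &\qquad v &= f_y \frac{Y}{Z} + c_y, \\
u' &= f_x \frac{sX}{sZ} + c_x = u, &\qquad v' &= f_y \frac{sY}{sZ} + c_y = v \qquad \text{for all } s > 0.
\end{aligned}
```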

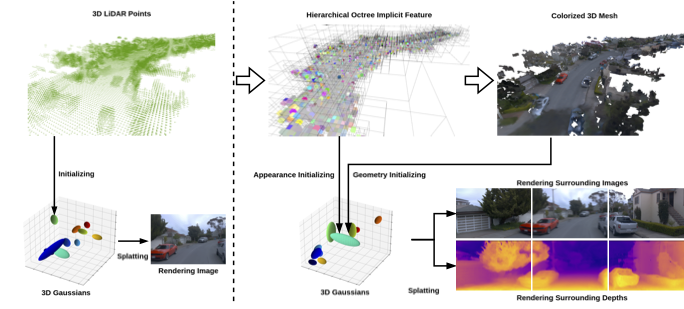

TCLC-GS: Tightly Coupled LiDAR-Camera Gaussian Splatting for Autonomous Driving

Cheng Zhao, Su Sun, Ruoyu Wang, Yuliang Guo, Jun-Jun Wan, Zhou Huang, Xinyu Huang, Yingjie Victor Chen, Liu Ren
ECCV 2024 [paper] [video demo]
A Gaussian Splatting (GS) method that fuses LiDAR and surround-view camera data for autonomous driving. It builds an intermediate octree feature volume before GS, so that GS parameters can be initialized more effectively from the 3D surface generated by the feature volume.

Enhancing Online Road Network Perception and Reasoning with Standard Definition Maps

Hengyuan Zhang, David Paz, Yuliang Guo, Arun Das, Xinyu Huang, Karsten Haug, Henrik I. Christensen, Liu Ren
IROS 2024 [paper] [project page]
An effective framework that leverages lightweight, scalable priors, namely Standard Definition (SD) maps, to estimate online vectorized HD map representations.

SeaBird: Segmentation in Bird’s View with Dice Loss Improves Monocular 3D Detection of Large Objects

Abhinav Kumar, Yuliang Guo, Xinyu Huang, Liu Ren, Xiaoming Liu
CVPR 2024 [paper] [code] [project page]
A mathematical framework proving that the dice loss yields better noise robustness and model convergence for large objects than regression losses do, together with a flexible monocular 3D detection pipeline that integrates bird's-eye-view (BEV) segmentation.
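
For reference, a common soft dice loss over a predicted probability map $p$ and a binary mask $g$ is $1 - (2\sum_i p_i g_i + \epsilon)/(\sum_i p_i + \sum_i g_i + \epsilon)$. The sketch below implements this textbook variant in plain Python; SeaBird's exact formulation (smoothing term, squared denominators, weighting) is not stated here and may differ, and the helper `missed_pixels_loss` is hypothetical. Because the loss is normalized by mask size, the same number of missed pixels costs less on a larger object, which gives some intuition for why object size matters.

```python
def soft_dice_loss(pred, target, eps=1e-6):
    """Soft dice loss between predicted probabilities and a binary mask.

    pred and target are flat sequences of equal length (e.g. a flattened
    BEV grid); pred holds values in [0, 1], target holds 0/1 labels.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    intersection = sum(p * t for p, t in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


def missed_pixels_loss(object_pixels, missed):
    """Hypothetical helper: dice loss when a prediction misses `missed` object pixels."""
    target = [1] * object_pixels
    pred = [1] * (object_pixels - missed) + [0] * missed
    return soft_dice_loss(pred, target)


# The same 2 missed pixels cost far more on a small object than on a large one.
print(round(missed_pixels_loss(4, 2), 3))    # 0.333
print(round(missed_pixels_loss(100, 2), 3))  # 0.01
```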

Behind the Veil: Enhanced Indoor 3D Scene Reconstruction with Occluded Surfaces Completion

Su Sun, Cheng Zhao, Yuliang Guo, Ruoyu Wang, Xinyu Huang, Victor (Yingjie) Chen, Liu Ren
CVPR 2024 [paper] [project page]
A neural reconstruction method that completes occluded surfaces in large-scale 3D scene reconstruction, a milestone toward automatically creating interactable digital twins of the real world.

3D Copy-Paste: Physically-Plausible Object Insertion for Monocular 3D Detection

Yuhao Ge, Hong-Xing Yu, Cheng Zhao, Yuliang Guo, Xinyu Huang, Liu Ren, Laurent Itti, Jiajun Wu
NeurIPS 2023 [paper] [code] [project page]
A physically plausible indoor 3D object insertion approach to automatically "copy" virtual objects and "paste" them into real scenes.

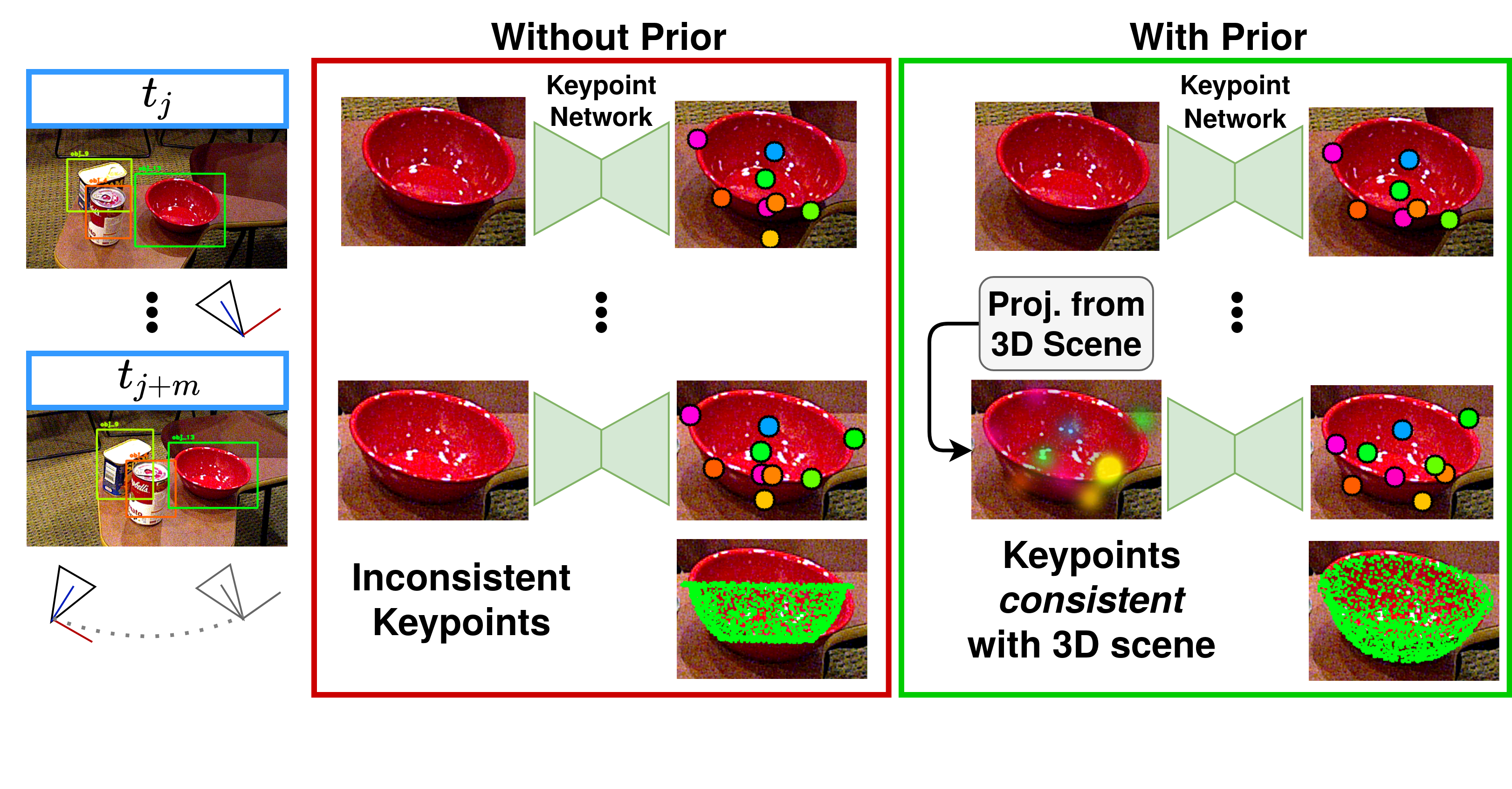

Symmetry and Uncertainty-Aware Object SLAM for 6DoF Object Pose Estimation

Nathaniel Merrill, Yuliang Guo, Xingxing Zuo, Xinyu Huang, Stefan Leutenegger, Xi Peng, Liu Ren, Guoquan Huang
CVPR 2022 [paper] [code]
A keypoint-based object-level SLAM framework that can provide globally consistent 6DoF pose estimates for symmetric and asymmetric objects.

OmniFusion: 360 Monocular Depth Estimation via Geometry-Aware Fusion

Yuyan Li*, Yuliang Guo*, Zhixin Yan, Xinyu Huang, Ye Duan, Liu Ren
CVPR 2022 (Oral Presentation) [paper] [code]
The first vision transformer approach to 360° monocular depth estimation under spherical distortion. Novel designs include tangent-image coordinate embedding and geometry-aware feature fusion.
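
Tangent images are usually obtained by a gnomonic projection, which maps points on the unit sphere onto a plane touching the sphere at a chosen centre $(\lambda_0, \varphi_0)$ in longitude and latitude. A minimal sketch of that standard projection follows; the number of tangent planes, their resolution, and the coordinate embedding used in OmniFusion are not specified here and are not reproduced, and the function name is hypothetical.

```python
import math


def gnomonic_project(lon, lat, lon0, lat0):
    """Project a sphere point (lon, lat) onto the plane tangent at (lon0, lat0).

    Angles are in radians. Returns tangent-plane coordinates (x, y). Raises
    ValueError for points on the far hemisphere (cos_c <= 0), where the
    gnomonic projection is undefined.
    """
    # Cosine of the angular distance between the point and the tangent centre.
    cos_c = (math.sin(lat0) * math.sin(lat)
             + math.cos(lat0) * math.cos(lat) * math.cos(lon - lon0))
    if cos_c <= 0:
        raise ValueError("point is not visible from this tangent plane")
    x = math.cos(lat) * math.sin(lon - lon0) / cos_c
    y = (math.cos(lat0) * math.sin(lat)
         - math.sin(lat0) * math.cos(lat) * math.cos(lon - lon0)) / cos_c
    return x, y


# A point 45 degrees east of an equatorial tangent centre lands at x = tan(45°) = 1.
print(tuple(round(c, 6) for c in gnomonic_project(math.radians(45), 0.0, 0.0, 0.0)))  # (1.0, 0.0)
```

Straight lines in the scene stay straight on each tangent plane, which is why such patches suit standard 2D network layers better than the distorted 360° image itself.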

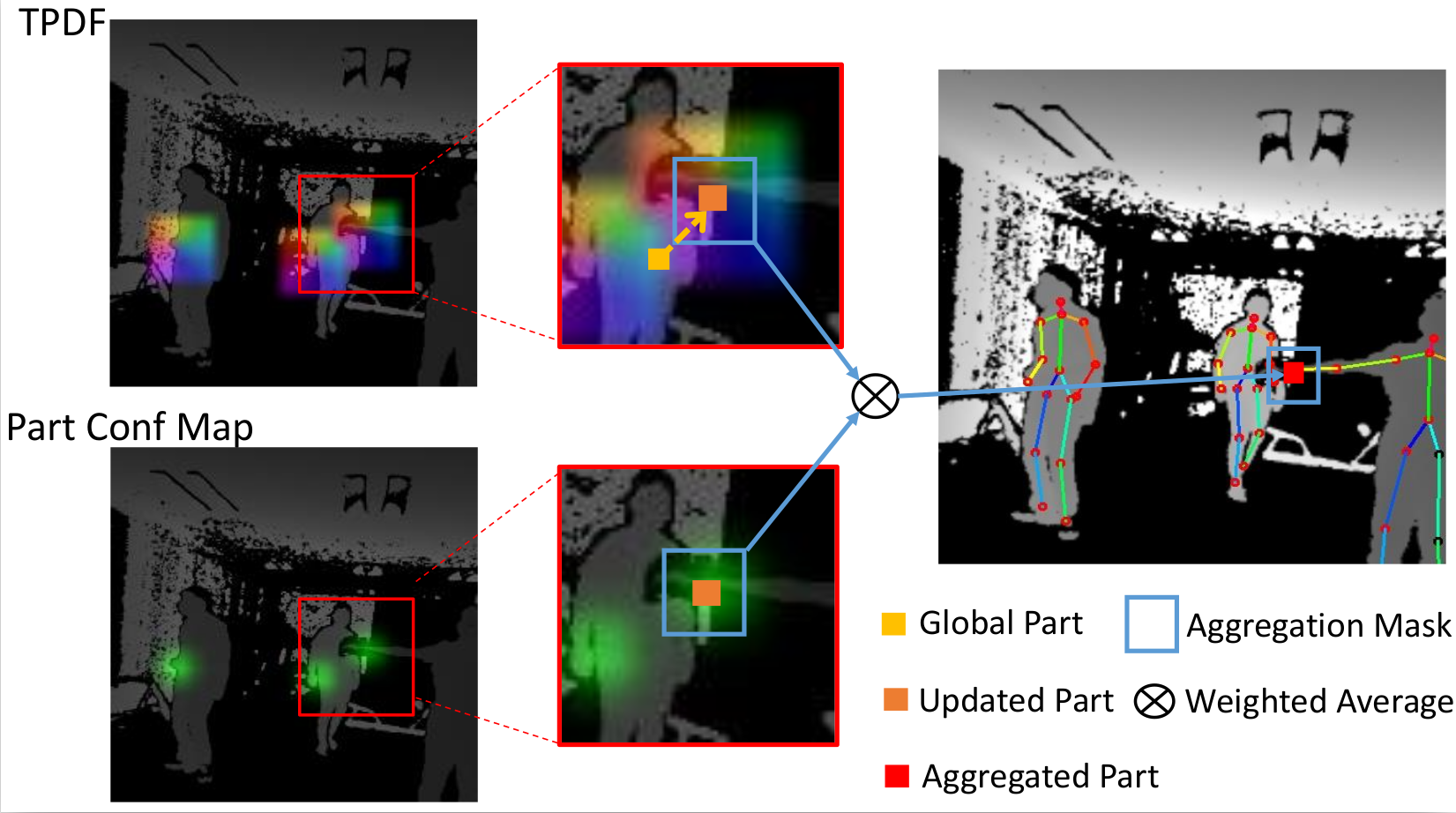

PoP-Net: Pose over Parts Network for Multi-Person 3D Pose Estimation from a Depth Image

Yuliang Guo, Zhong Li, Zekun Li, Xiangyu Du, Shuxue Quan, Yi Xu
WACV 2022 [paper] [code] [dataset]
A real-time method that predicts multi-person 3D poses from a depth image. A new part-level representation enables explicit fusion of bottom-up part detection and global pose detection. A new 3D human posture dataset with challenging multi-person occlusion is also introduced.

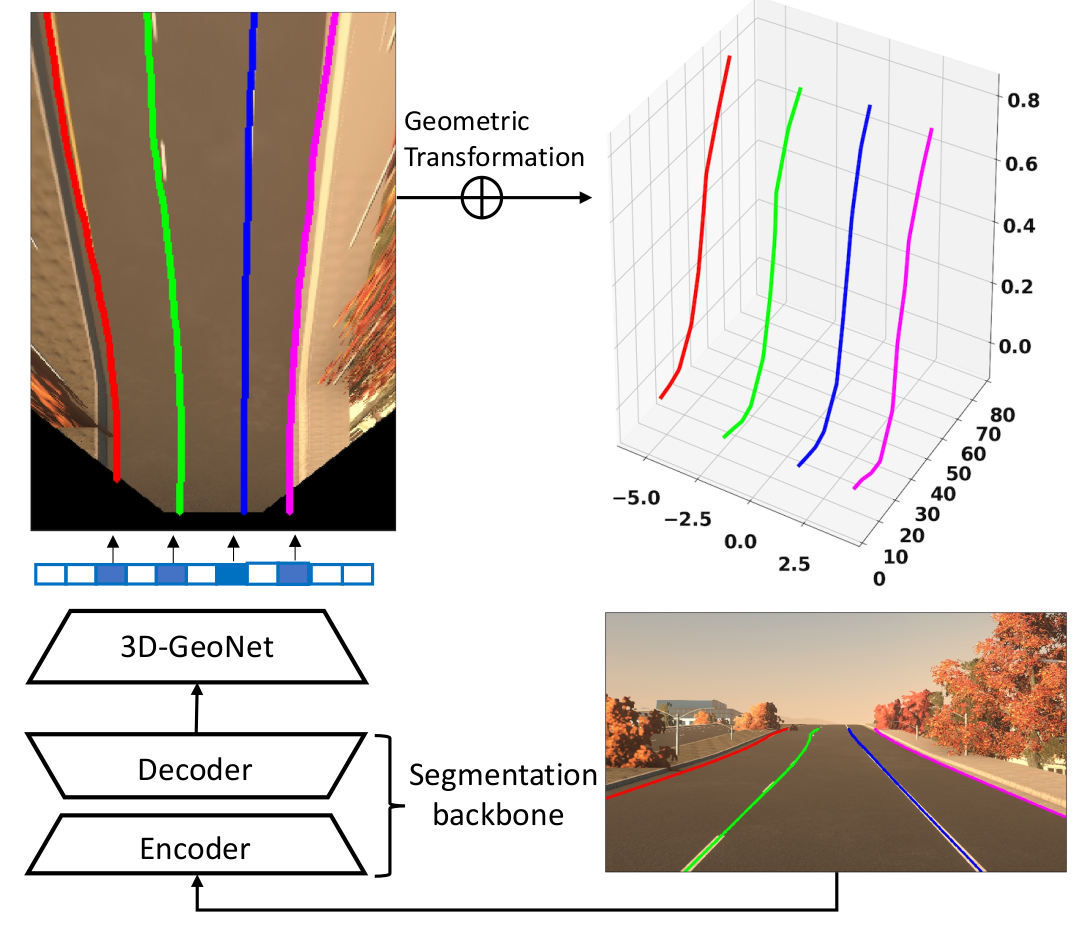

Gen-LaneNet: A Generalized and Scalable Approach for 3D Lane Detection

Yuliang Guo, Guang Chen, Peitao Zhao, Weide Zhang, Jinghao Miao, Jingao Wang, Tae Eun Choe
ECCV 2020 [paper] [code] [dataset]
A pioneering work on predicting 3D lanes from a single image, with strong generalization to novel scenes. A synthetic 3D lane dataset is also introduced.

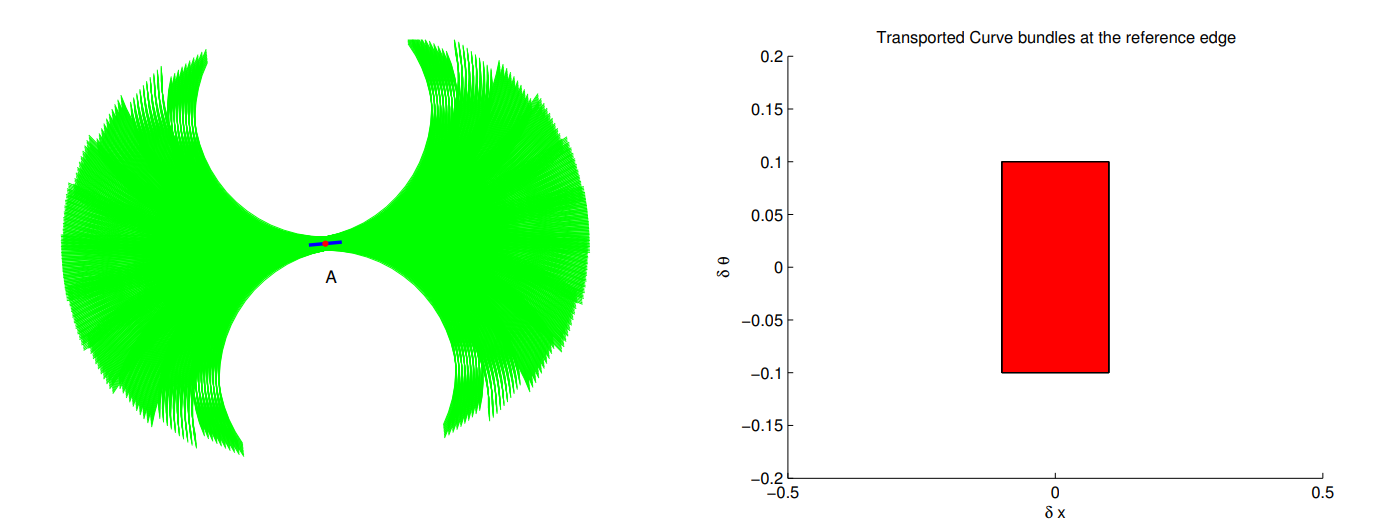

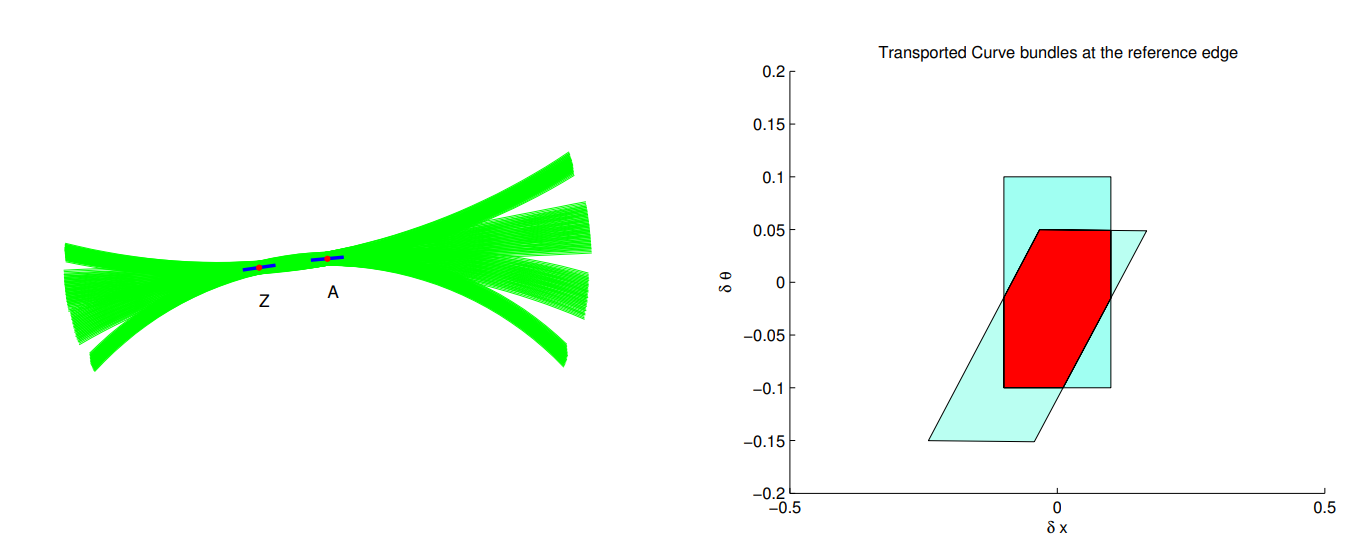

Differential Geometry in Edge Detection: Accurate Estimation of Position, Orientation and Curvature

Benjamin B. Kimia, Xiaoyan Li, Yuliang Guo, Amir Tamrakar
TPAMI 2018 [paper] [code] [dataset]
Numerically robust image filters and symbolic curve models are introduced to precisely estimate the differential-geometric attributes of image edges, including localization, orientation, curvature, and edge topology.

Robust Pose Tracking with a Joint Model of Appearance and Shape

Yuliang Guo, Lakshmi N. Govindarajan, Benjamin B. Kimia, Thomas Serre
arXiv 2018 [paper]
A joint model of learned part-based appearance and parametric shape representation to precisely estimate the highly articulated poses of multiple laboratory animals.

BoMW: Bag of Manifold Words for One-Shot Learning Gesture Recognition From Kinect

Lei Zhang, Shengping Zhang, Feng Jiang, Yuankai Qi, Jun Zhang, Yuliang Guo, Huiyu Zhou
IEEE Transactions on Circuits and Systems for Video Technology 2017 [paper]
One-shot learning gesture recognition on RGB-D data recorded with Microsoft Kinect, using a novel bag-of-manifold-words (BoMW) feature representation on symmetric positive definite (SPD) manifolds.

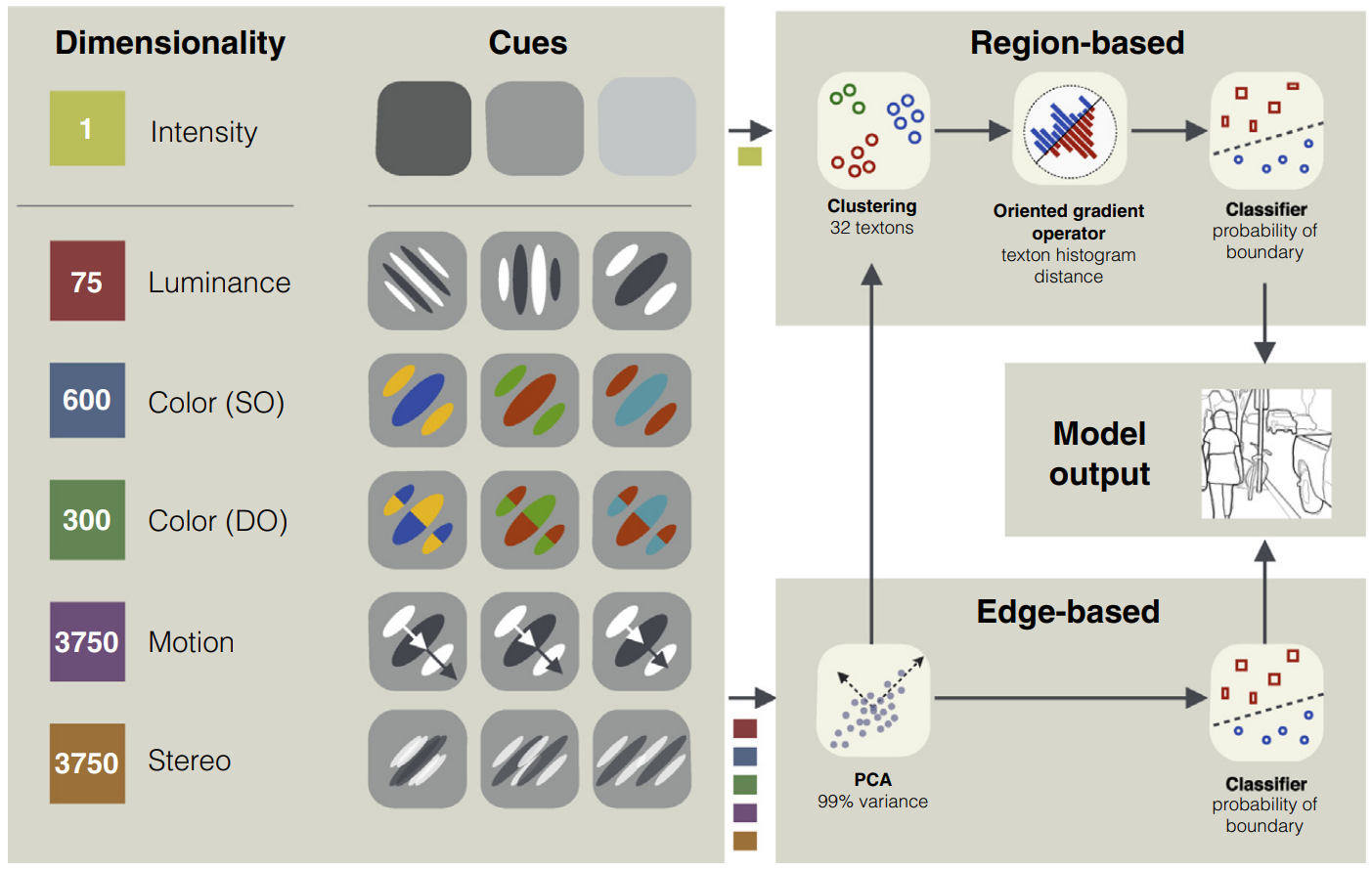

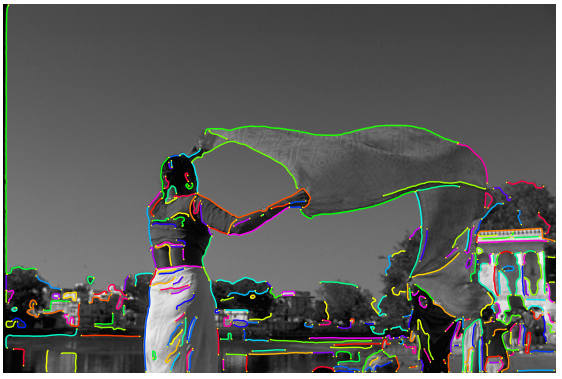

A Systematic Comparison between Visual Cues for Boundary Detection

David A. Mely, Junkyung Kim, Mason McGill, Yuliang Guo, Thomas Serre
Vision Research 2016 [paper] [dataset]
This study investigates the relative diagnosticity and the optimal combination of multiple cues (we consider luminance, color, motion and binocular disparity) for boundary detection in natural scenes. A multi-cue boundary dataset is introduced to facilitate the study.

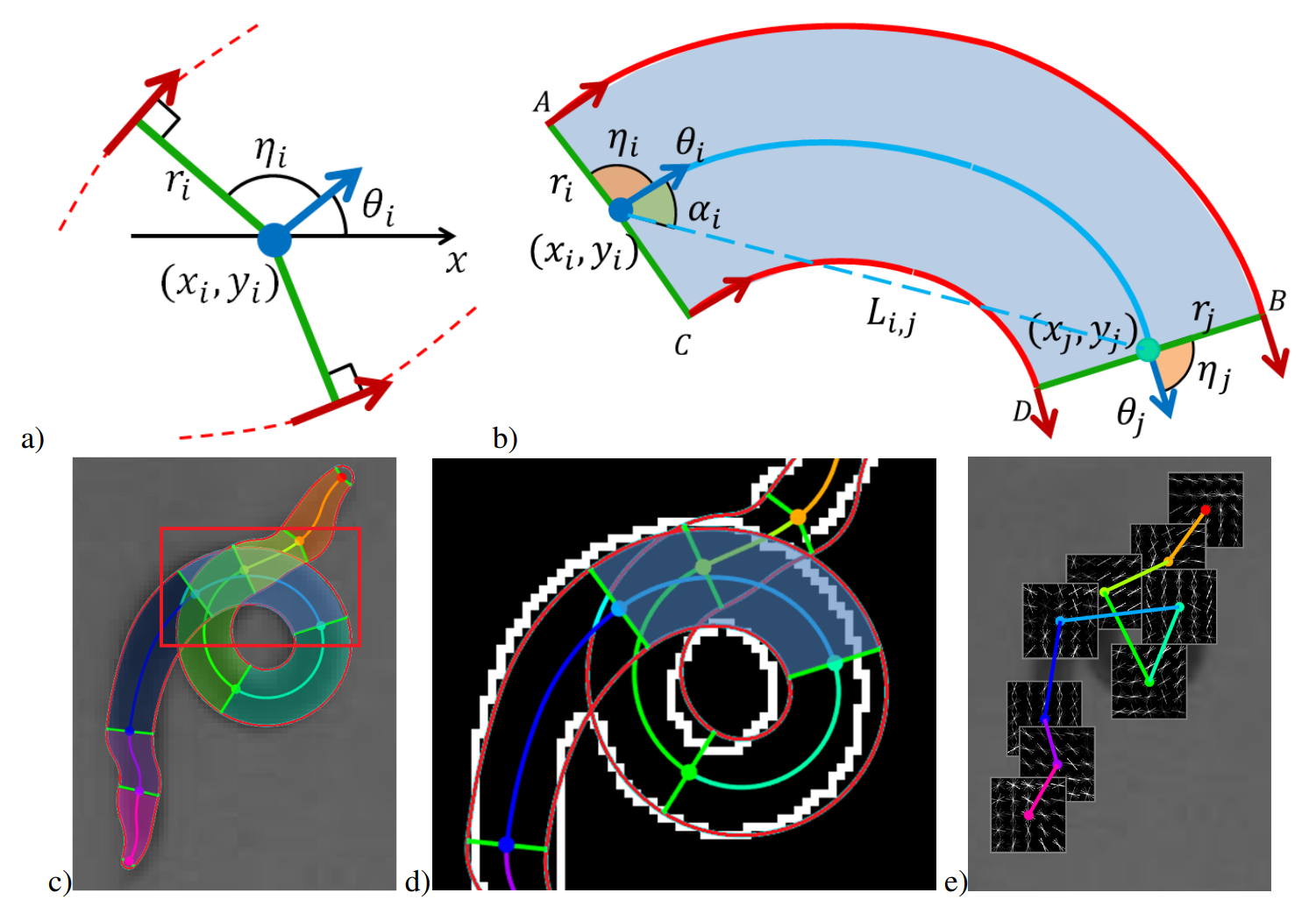

A Multi-Stage Approach to Curve Extraction

Yuliang Guo, Naman Kumar, Maruthi Narayanan, Benjamin B. Kimia
ECCV 2014 [paper] [code]
A multi-stage approach to curve extraction in which the curve-fragment search space is iteratively reduced by removing unlikely candidates using geometric constraints, without affecting recall, until applying an objective functional becomes appropriate.

Industrial Impact

Selected Patents
